AdaBoost approach to MNIST

Three days ago, I trained a CART classification tree model on the MNIST data set. I submitted its predictions to the Digit Recognizer competition on Kaggle, which uses this data set. The submission received an accuracy score of 0.86346.

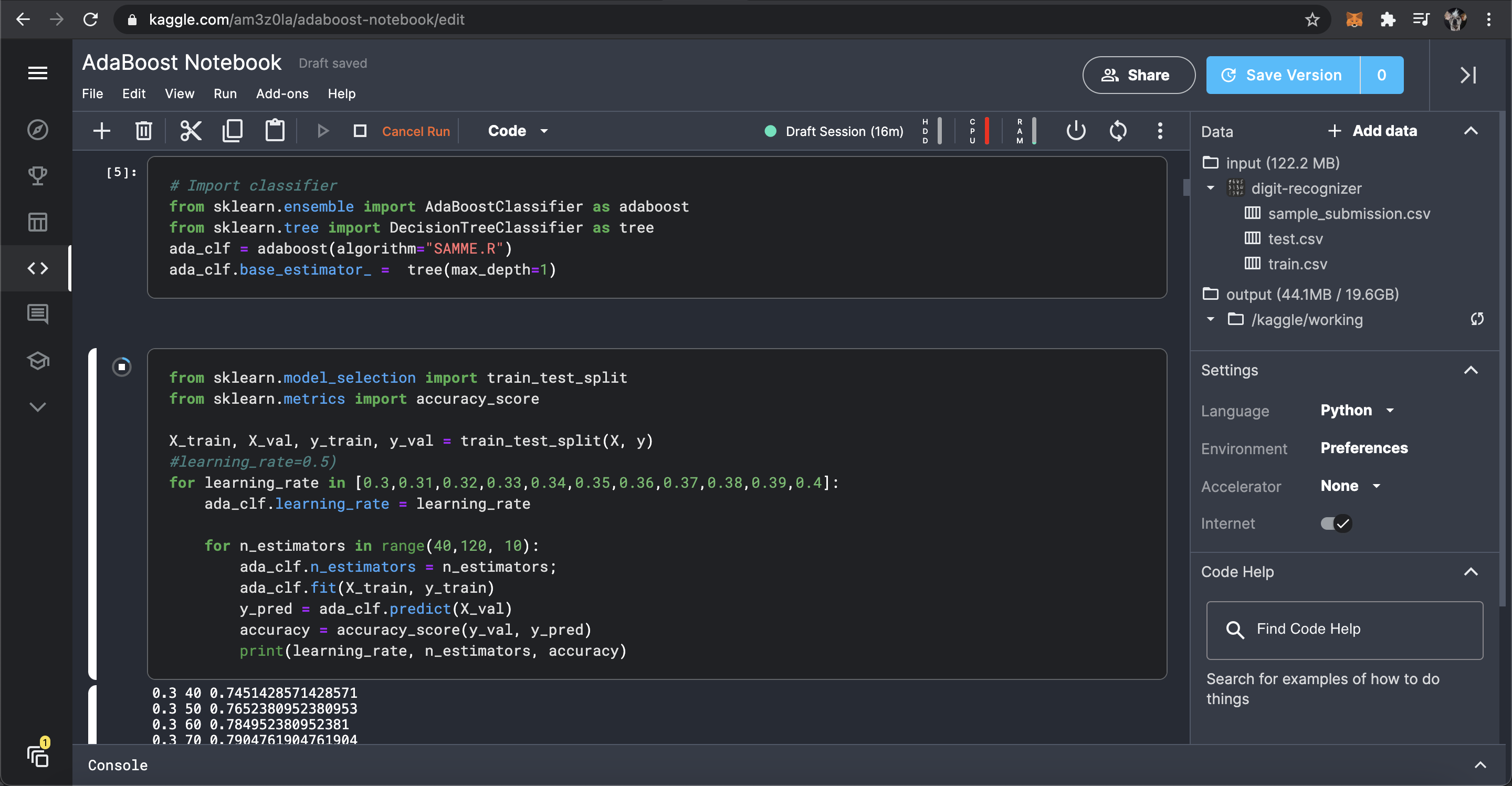

So I expected the AdaBoost model I’m training today to perform better, since its base algorithm is also a classification tree. Surprisingly, it hasn’t scored above 0.81 accuracy, even on the training set. I think this suggests that tree-based algorithms may not perform well on this data set.
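To make the comparison concrete, here is a minimal sketch of a single tree set against AdaBoost with tree base learners. It is not the code behind the numbers above: it assumes scikit-learn, and it uses the small 8x8 `load_digits` data set as a stand-in for MNIST so that it runs without a download. The helper `fit_and_score`, the depth values, and `n_estimators=50` are illustrative choices. One thing it lets you check is base-tree depth: scikit-learn's `AdaBoostClassifier` boosts depth-1 stumps by default, which can leave it behind a fully grown single tree on a 10-class problem.

```python
from sklearn.datasets import load_digits
from sklearn.ensemble import AdaBoostClassifier
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Stand-in data: 1,797 8x8 digit images, not the 28x28 MNIST images.
X, y = load_digits(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)


def fit_and_score(model):
    """Fit on the training split and return (train accuracy, test accuracy)."""
    model.fit(X_train, y_train)
    return model.score(X_train, y_train), model.score(X_test, y_test)


models = {
    # A single, fully grown CART-style tree.
    "single tree": DecisionTreeClassifier(random_state=0),
    # AdaBoost over depth-1 stumps (scikit-learn's default base learner).
    "adaboost, depth 1": AdaBoostClassifier(
        DecisionTreeClassifier(max_depth=1), n_estimators=50, random_state=0
    ),
    # AdaBoost over deeper trees (depth 8 is an arbitrary illustrative value).
    "adaboost, depth 8": AdaBoostClassifier(
        DecisionTreeClassifier(max_depth=8), n_estimators=50, random_state=0
    ),
}

results = {name: fit_and_score(model) for name, model in models.items()}

for name, (train_acc, test_acc) in results.items():
    print(f"{name:>18}: train={train_acc:.3f}  test={test_acc:.3f}")
```

If the depth-1 ensemble trails the single tree here as well, the gap may come from the base learner settings and not from tree-based methods as such. The results on this stand-in data set do not necessarily carry over to MNIST.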